A key selling point of public cloud computing is that you “pay only for what you use”. For many Microsoft Azure resources, this can be easily accomplished via the Azure Portal. For example, auto-scaling of scale sets can be configured through the Azure portal to adjust instances to match a load or schedule. For other Azure resources or more complex workloads, it is more involved. You will need to write code to provide the intended capability. In this example we will explore automating the deployment of resources needed to complete acceptance testing.

Scenario

You are leveraging an Azure SQL Elastic Pool for your production databases. To test rollouts of new functionality you need an exact copy of the production database environment. You also need to minimize cost.

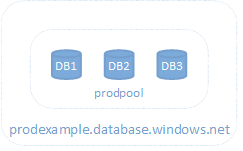

Production Resources

SQL Servers

- database.windows.net

SQL Elastic Pool

- prodpool

SQL Databases

- db1

- db2

- db3

Solution

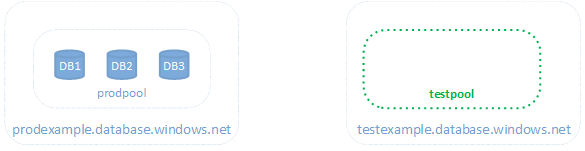

The approach is to create the testing environment at the moment it is needed. It will be an exact copy of the running production. An Azure SQL server will be created and remain in place to ensure the name remains the same (and therefore SQL connection strings won’t have to be updated before completing tests). There is no cost for a SQL Server with no databases, so costs will only be incurred during the actual testing.

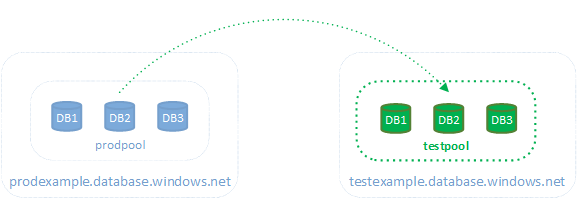

Each time the test environment is needed, a PowerShell script will be run to:

- Create a SQL Server elastic pool named testpool on testexample.database.windows.net

- Copy each database from prodexample.database.windows.net to the testpool SQL Server elastic pool on testexample.database.windows.net

The script leverages the Microsoft Azure PowerShell module, and goes through the following steps

- Get information on the production elastic pool so we know what size and edition to create for the test pool and what databases to copy

$ProdPool = Get-AzureRmSqlElasticPool -ServerName “prodexample” `

-ResourceGroupName “prod” `

-ElasticPoolName “prodpool”

$ProdPoolDBs = $ProdPool | Get-AzureRmSqlElasticPoolDatabase

- Create the test elastic pool

$TestPool = New-AzureRmSqlElasticPool -ResourceGroupName “testsql” `

-ServerName “testexample” `

-ElasticPoolName “testpool” `

-Edition $ProdPool.Edition `

-Dtu $ProdPool.Dtu

- Copy each database from the production elastic pool to the testing elastic pool

foreach ($DB in $ProdPoolDBs) {

New-AzureRmSqlDatabaseCopy -ResourceGroupName “prod” `

-ServerName “prodexample” `

-DatabaseName $DB.DatabaseName `

-CopyResourceGroupName “testsql” `

-CopyServerName “testexample” `

-CopyDatabaseName $DB.DatabaseName `

-ElasticPoolName “testpool”

}

When the testing is completed, the test environment can be deprovisioned by removing the databases and then removing the test elastic pool.

The result is that you pay nothing until your test environment is needed, and you can provision your test environment as an exact replica of your production environment–in minutes (depending upon number and size of the databases).

Cloud 9 maintains scripts to automate this scenario and many other Azure resource management scenarios. Please contact us at (855) 225-6839 or email to info@cloud9infosystems.com for information on our services and capabilities.

Ken Channon

Principal, Cloud Infrastructure Architect

Cloud 9 Infosystems, Inc.